Applications built on legacy code (such as COBOL) have been the Achilles’ heel of companies and governments alike for decades. Maintaining these systems has become untenable, not only because of cost but also because of mounting risk, which makes it ever harder to “kick the can down the road.” Inaction on legacy systems leads to spiraling expenses, operational inefficiencies, and heightened cybersecurity and compliance vulnerabilities. That is why many CTOs are now considering legacy code refactoring.

Traditionally, the only way to address legacy code has been manual refactoring. However, this approach is extraordinarily expensive and time-consuming. The typical payback period is 5-10 years, and that investment buys no new features, only modernization. Moreover, refactoring a single application often takes 1-2 years, which makes manual refactoring nonviable for organizations managing hundreds or even thousands of legacy systems.

Enter Generative AI and automated coding. The rapid rise of these technologies has prompted a reasonable, if oversimplified, question from senior executives outside the tech domain: “Why can’t Generative AI just fix the legacy code problem?” Most academic researchers in the area understand that it’s not that simple. I’ll explore this topic in more detail in a dedicated blog post, but the short version is that the Large Language Models (LLMs) used to automate code translation need a corpus of code to learn from, and most large legacy applications are proprietary. Neither the legacy code nor its translations are in the public domain and available for training. LLMs are also significantly limited by memory (context-window) constraints. Researchers are working to move past these constraints, and they are making progress, but current line-level accuracy rates are only in the 40-60% range for COBOL-to-Java or COBOL-to-C translations.

What many fail to realize is that there is a better alternative. Automated legacy code refactoring tools based on transpiler technology have existed for decades, and transpilers can achieve line-level accuracy of 85-95%+ for COBOL-to-Java or COBOL-to-C translations. In fact, Recodex was founded 49 years ago on this very premise.

What is a transpiler? A transpiler, short for source-to-source compiler, translates code written in one programming language into another. Unlike traditional compilers, which convert code into machine language, transpilers preserve the structure and logic of the original code while adapting it for a new platform or language. This makes them ideal for modernizing legacy systems, as they can automate significant portions of the refactoring process while maintaining functional equivalence.
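To make the idea concrete, here is a deliberately tiny sketch of the parse-then-emit pattern behind source-to-source translation. It assumes a hypothetical three-statement COBOL subset (`MOVE`, `ADD ... TO`, `DISPLAY`) and uses regular expressions where a real transpiler would use a full COBOL grammar; every name in it (`transpile`, `java_name`, the node classes) is illustrative and not part of any Recodex tool. It also ignores PIC-clause semantics such as fixed-point truncation, which a production transpiler must reproduce to keep functional equivalence.

```python
import re
from dataclasses import dataclass
from typing import List, Union


# Minimal AST node types for a hypothetical three-statement COBOL subset.
@dataclass
class Move:
    source: str
    target: str


@dataclass
class Add:
    operand: str
    target: str


@dataclass
class Display:
    item: str


Stmt = Union[Move, Add, Display]

NAME = r"([\w-]+)"  # COBOL data names may contain hyphens (e.g. WS-BALANCE)

# One pattern per supported statement; a real transpiler uses a full grammar.
PATTERNS = [
    (re.compile(rf"MOVE\s+{NAME}\s+TO\s+{NAME}\.?$", re.I), lambda m: Move(m[1], m[2])),
    (re.compile(rf"ADD\s+{NAME}\s+TO\s+{NAME}\.?$", re.I), lambda m: Add(m[1], m[2])),
    (re.compile(rf"DISPLAY\s+{NAME}\.?$", re.I), lambda m: Display(m[1])),
]


def parse(line: str) -> Stmt:
    """Turn one COBOL statement into an AST node, or fail loudly."""
    text = line.strip()
    for pattern, build in PATTERNS:
        match = pattern.match(text)
        if match:
            return build(match)
    raise ValueError(f"unsupported statement: {text!r}")


def java_name(token: str) -> str:
    """Numeric literals pass through; WS-BALANCE becomes wsBalance."""
    if token.isdigit():
        return token
    head, *rest = token.lower().split("-")
    return head + "".join(part.capitalize() for part in rest)


def emit(stmt: Stmt) -> str:
    """Render one AST node as one Java statement."""
    if isinstance(stmt, Move):
        return f"{java_name(stmt.target)} = {java_name(stmt.source)};"
    if isinstance(stmt, Add):
        return f"{java_name(stmt.target)} += {java_name(stmt.operand)};"
    return f"System.out.println({java_name(stmt.item)});"


def transpile(cobol_lines: List[str]) -> List[str]:
    # Parse each statement, then emit Java one-to-one, preserving order.
    return [emit(parse(line)) for line in cobol_lines if line.strip()]


if __name__ == "__main__":
    source = ["MOVE 100 TO WS-BALANCE.", "ADD WS-DEPOSIT TO WS-BALANCE.", "DISPLAY WS-BALANCE."]
    for java_line in transpile(source):
        print(java_line)
```

Each COBOL statement maps to exactly one Java statement in the same order, which is the structural preservation described above; the trade-off is that the output mirrors COBOL idioms rather than idiomatic Java.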

A review of the latest research on Generative AI showed me that the frameworks currently used to evaluate legacy code refactoring are overly academic. They focus largely on line-level accuracy, functional accuracy, and human readability (usually approximated with BLEU scores): important metrics, but insufficient for addressing the full lifecycle of a CTO’s concerns.

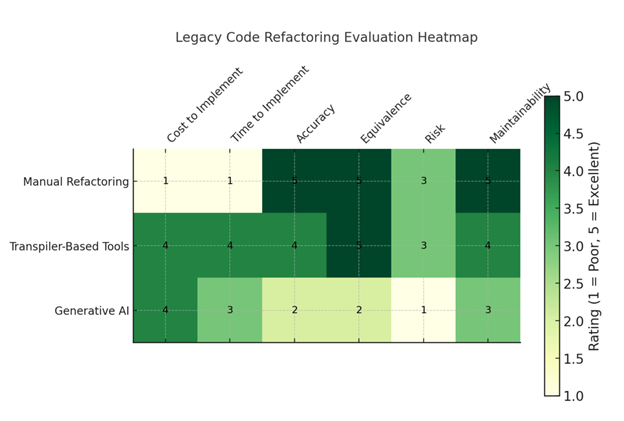

This is where Recodex’s new framework comes in. By aligning with the lifecycle priorities of CTOs, our approach provides a comprehensive way to evaluate legacy code refactoring across six key pillars: Cost to Implement, Time to Implement, Accuracy, Equivalence, Risk, and Maintainability.

1. Cost to Implement

Cost to implement measures the financial resources required to execute the refactoring process. This includes:

Tooling Costs: The price of software or platforms used during refactoring.

Human Resource Costs: Salaries or fees for developers, testers, and other team members involved in the process.

Operational Costs: Overhead costs incurred during the project timeline, such as infrastructure or licenses.

2. Time to Implement

Time to implement measures the total duration required to complete the refactoring process, including:

Preparation Time: Time spent understanding legacy code and configuring tools or teams.

Execution Time: The period required for translating, testing, and refining code.

Deployment Time: Time spent on integrating the refactored system into production environments.

3. Accuracy

Accuracy measures the syntactic and computational integrity of the refactored code. It includes:

Line-level Errors: Measured as the percentage of lines with syntax issues such as unmatched braces, missing semicolons, or invalid keywords. This metric highlights the basic syntactic correctness of the code, with a target of 0% errors.

Compilability: A binary metric (yes/no) that determines whether the refactored code compiles without errors, evaluated with compilers and static analysis tools.

Computational Accuracy: Measured as the percentage of passed functional test cases (“@ Pass N%”). It evaluates whether the code executes correctly and produces the expected results for all scenarios, with tolerance for minor logical errors (e.g., slight miscalculations).
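As a rough illustration of how the three accuracy measures might be computed, the sketch below assumes the compiler result and the per-test outcomes are supplied by your own build and test pipeline. The `naive_line_has_error` check is a hypothetical heuristic (it only looks for Java lines that do not end in `;`, `{`, or `}`) standing in for a real parser or static analyzer, so expect false positives on annotations, multi-line expressions, or `//` inside string literals.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


def naive_line_has_error(line: str) -> bool:
    """Hypothetical heuristic, not a parser: flag a non-blank Java line that
    neither ends a statement (';') nor opens or closes a block ('{' / '}')."""
    code = line.split("//", 1)[0].strip()  # drop trailing line comments
    if not code:
        return False
    return not code.endswith((";", "{", "}"))


@dataclass
class AccuracyReport:
    line_error_pct: float  # % of non-blank lines flagged; target is 0%
    compiles: bool         # yes/no result reported by the real compiler
    pass_rate_pct: float   # % of functional test cases passed ("@ Pass N%")


def accuracy_report(
    lines: Sequence[str],
    compiles: bool,
    test_results: Sequence[bool],
    has_error: Callable[[str], bool] = naive_line_has_error,
) -> AccuracyReport:
    non_blank = [ln for ln in lines if ln.strip()]
    flagged = sum(1 for ln in non_blank if has_error(ln))
    line_pct = 100.0 * flagged / len(non_blank) if non_blank else 0.0
    passed = sum(1 for ok in test_results if ok)
    pass_pct = 100.0 * passed / len(test_results) if test_results else 0.0
    return AccuracyReport(line_pct, compiles, pass_pct)


if __name__ == "__main__":
    java = ["int total = 0;", "total += 5", "System.out.println(total);"]
    print(accuracy_report(java, compiles=False, test_results=[True, True, False, True]))
```

Keeping the three numbers separate matters: code can score well on line-level errors and still fail to compile, or compile and still fail functional tests.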

Traditional evaluation frameworks emphasize this pillar, but accuracy alone is insufficient to guarantee a successful refactor.

4. Equivalence

Equivalence measures how faithfully the refactored code reproduces the original functionality, including:

Missed Business Requirements: A binary metric (yes/no) that evaluates whether all critical business requirements have been translated into the new codebase. This ensures no functionality gaps, as any missed requirement can result in severe business disruptions.

Functional Equivalence: Measured as the percentage of scenarios where the refactored code’s behavior matches the original code. This includes edge cases and ensures consistency in outcomes across all inputs, with minor deviations tolerated for runtime differences.
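Functional equivalence is commonly checked with differential testing: run the original and the refactored code on the same scenarios and compare outputs. The sketch below assumes two hypothetical stand-in functions. `legacy_interest` mimics a COBOL `COMPUTE` without `ROUNDED`, which truncates the result to the receiving field's two decimal places, while `refactored_interest` is an imagined translation that switched to binary floating point and rounding. The `tolerance` parameter corresponds to the minor deviations mentioned above; how much deviation (if any) is acceptable is a business decision, not a technical one.

```python
from decimal import ROUND_DOWN, Decimal
from typing import Callable, List, Sequence, Tuple

Scenario = Tuple[str, str]  # (balance, rate) as decimal strings


def legacy_interest(balance: str, rate: str) -> Decimal:
    # Stand-in for the original COBOL: COMPUTE without ROUNDED truncates
    # the result to the receiving field's two decimal places.
    return (Decimal(balance) * Decimal(rate)).quantize(Decimal("0.01"), rounding=ROUND_DOWN)


def refactored_interest(balance: str, rate: str) -> float:
    # Hypothetical translation that switched to binary floats and rounding.
    return round(float(balance) * float(rate), 2)


def functional_equivalence(
    old: Callable[[str, str], Decimal],
    new: Callable[[str, str], float],
    scenarios: Sequence[Scenario],
    tolerance: Decimal = Decimal("0"),
) -> Tuple[float, List[Scenario]]:
    """Return (% of scenarios whose outputs match, list of mismatching scenarios)."""
    if not scenarios:
        raise ValueError("need at least one scenario")
    mismatches = []
    for scenario in scenarios:
        expected = old(*scenario)
        actual = Decimal(str(new(*scenario)))  # str() keeps the shortest float repr
        if abs(actual - expected) > tolerance:
            mismatches.append(scenario)
    matched = len(scenarios) - len(mismatches)
    return 100.0 * matched / len(scenarios), mismatches


SCENARIOS = [("100.00", "0.05"), ("10.99", "0.035"), ("19.99", "0.05"), ("0.00", "0.05")]

if __name__ == "__main__":
    print(functional_equivalence(legacy_interest, refactored_interest, SCENARIOS))
```

With the default zero tolerance, three of the four scenarios match (75%). The mismatch, a balance of 19.99 at a rate of 0.05, yields 0.99 in the legacy version and 1.00 in the translation: exactly the kind of one-cent drift that can hide an unmet business requirement in financial code.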

Missed Business Requirements, in particular, represent a high-stakes risk area that is often underestimated in traditional evaluations.

5. Risk

Risk addresses vulnerabilities and uncertainties in the refactoring process:

Code Security: Measured on a scale (High/Medium/Low) to assess the likelihood and impact of vulnerabilities such as proprietary data leaks or cybersecurity breaches. This includes evaluating access controls, encryption practices, and code exposure risks.

Long-tail Errors: Measured on a scale (High/Medium/Low) based on the ability to identify and address rare edge cases through techniques like stress testing, fuzz testing, and input simulations. This metric helps evaluate how well the code handles unexpected inputs or unanticipated workflows.
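Long-tail errors rarely surface under everyday inputs, which is why fuzzing for them usually biases generation toward boundary values. The sketch below assumes a hypothetical legacy field declared `PIC 9(3)`: moving a larger value into it truncates the high-order digits (1234 becomes 234), whereas an imagined naive translation stores the value unchanged. The boundary list and the 5% edge-case rate are arbitrary choices for illustration, and the legacy stand-in only models non-negative inputs.

```python
import random
from typing import Callable, List

BOUNDARIES = [0, 1, 998, 999, 1000, 1001, 99999]  # hypothetical edge values for PIC 9(3)


def legacy_store_qty(value: int) -> int:
    # Stand-in for MOVE into a PIC 9(3) field: digits beyond three integer
    # positions are truncated on the left (1234 -> 234). Non-negative inputs only.
    return value % 1000


def refactored_store_qty(value: int) -> int:
    # Hypothetical naive translation: stores the value unchanged in an int.
    return value


def fuzz_inputs(rng: random.Random, n: int, edge_rate: float = 0.05) -> List[int]:
    """Mostly everyday in-range values, with an occasional boundary value mixed in."""
    values = []
    for _ in range(n):
        if rng.random() < edge_rate:
            values.append(rng.choice(BOUNDARIES))
        else:
            values.append(rng.randint(0, 999))
    return values


def find_divergences(
    old: Callable[[int], int], new: Callable[[int], int], inputs: List[int]
) -> List[int]:
    """Distinct inputs on which the two implementations disagree, sorted."""
    return sorted({x for x in inputs if old(x) != new(x)})


if __name__ == "__main__":
    inputs = fuzz_inputs(random.Random(42), n=2000)  # fixed seed for reproducibility
    print(find_divergences(legacy_store_qty, refactored_store_qty, inputs))
```

Because almost every generated value falls in the 0-999 range, a harness without the boundary bias would seldom expose the divergence; that rarity is what puts such errors in the long tail.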

Unlike accuracy and equivalence, risk factors require proactive strategies to ensure business continuity and operational resilience.

6. Maintainability

Maintainability focuses on the long-term sustainability of the codebase:

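Human Readability: Typically approximated with BLEU scores, which measure n-gram overlap between the refactored code and a reference translation (for example, one written by a human developer). A high score suggests the code resembles human-written code that follows coding standards, which helps keep it understandable and maintainable for developers.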

Scalability and Modifiability: While not explicitly quantified, maintainability includes qualitative assessments of how easily the code can be extended or modified for future needs. Tools and manual reviews assess adherence to best practices for modularity and clarity.

While maintainability is a cornerstone of academic research, it should be balanced against the immediate business and security risks highlighted above.

When considering how to modernize legacy systems, organizations typically choose among three main approaches: manual refactoring, transpiler-based tools, and generative AI-driven methods. Each approach has strengths and weaknesses when evaluated across the six pillars: Cost to Implement, Time to Implement, Accuracy, Equivalence, Risk, and Maintainability.

Manual Refactoring

Manual refactoring remains the most traditional approach to modernizing legacy systems. However, it is highly resource-intensive. Cost to Implement is very high due to reliance on skilled developers who must analyze and translate code line by line, often making it 5-10 times more expensive than transpiler-based solutions. Similarly, Time to Implement is the longest of the three approaches, with a single application often taking a year or more to complete.

On the positive side, manual refactoring excels in Accuracy. Line-level error rates are nearly nonexistent because human developers can ensure strict syntax compliance and computational correctness. Additionally, Equivalence is well-addressed, as developers can manually tailor the refactored code to preserve all business requirements and ensure functional fidelity. However, Risk is higher than often acknowledged. Many legacy systems contain undocumented workarounds and implicit functionality developed over decades. These are easily missed in a manual rebuild, potentially introducing critical long-tail errors. Maintainability is a strength of this approach, as manually crafted code is typically idiomatic, modular, and easy to modify in the long term.

Transpiler-Based Tools

Transpiler-based tools offer a more efficient and cost-effective alternative. Cost to Implement is significantly lower than manual refactoring, at roughly one-fifth to one-tenth of the cost, as much of the work is automated. Time to Implement is also much shorter, typically requiring only 1-2 months for a single application, thanks to automation and pre-built syntax trees.

Transpilers excel in Accuracy, with line-level error rates below 5%. These tools also guarantee Functional Equivalence by leveraging syntax trees to preserve the structure and logic of the original code, ensuring that even undocumented legacy workarounds are retained. This makes transpilers particularly well-suited for modernizing older, complex systems. Risk is moderate, as automated tools can occasionally struggle with proprietary data or edge cases, but these issues are usually manageable with post-processing. Maintainability is good but not as high as manual refactoring, as transpiler-generated code can sometimes lack idiomatic clarity.

Generative AI Methods

Generative AI is an experimental but increasingly popular approach. Cost to Implement is similar to that of transpiler-based tools, given comparable tooling and computational resource requirements. However, Time to Implement is longer, as generative models produce translations with error rates of 20-40%, requiring extensive post-processing and validation to achieve functional accuracy.

Generative AI’s Accuracy is lower than that of transpilers, as it struggles with complex syntax and logical correctness. While the generated code often appears polished and readable, it may lack functional integrity. Equivalence is not guaranteed, as implicit logic and nuanced business requirements are frequently misinterpreted, leading to significant gaps in functionality. Risk is particularly high for generative AI, especially in environments without private cloud infrastructure. Cybersecurity vulnerabilities and the potential for introducing long-tail errors through hallucination are major concerns. However, Maintainability may be a relative strength, as the generated code often follows modern conventions and appears clean, though it may still lack underlying accuracy and robustness.

Refactoring legacy code is not just a technical challenge; it’s a balancing act that requires a full understanding of a CTO’s priorities. CTOs must weigh the costs and timelines of each approach against their organization’s tolerance for risk and error. They don’t have unlimited resources or time, nor can they afford to compromise on functional equivalence or introduce vulnerabilities into critical systems.

Manual refactoring offers unparalleled accuracy and maintainability but comes at a prohibitive cost and time investment, with the added risk of overlooking undocumented workarounds. Transpiler-based tools strike a compelling balance, delivering high accuracy and guaranteed functional equivalence at a fraction of the cost and time. Generative AI, while promising, remains a high-risk option due to its lower accuracy, lack of guaranteed equivalence, and potential security vulnerabilities.

Ultimately, the right choice depends on the specific constraints and objectives of the organization. By understanding the trade-offs across all six pillars—Cost to Implement, Time to Implement, Accuracy, Equivalence, Risk, and Maintainability—CTOs can make informed decisions that align with their strategic goals.

At Recodex, we’re committed to helping CTOs navigate these complex decisions with tools and methodologies that address the full lifecycle of their concerns. With our framework, you can approach refactoring with confidence, ensuring smarter, safer, and more strategic outcomes. To learn more about our offerings, contact us.